Edge AI application design based on 5CEFA9F23I7N: FPGA and embedded processor fusion

Smart systems at the edge need speed, efficiency, and flexibility. Combining an FPGA with an embedded processor helps meet those needs, enabling the fast decisions and low latency that edge systems depend on. That is why edge AI designs built on the 5CEFA9F23I7N, a Cyclone V E FPGA, paired with an embedded processor are becoming increasingly important.

Recent market reports point to this shift. One projection has the global edge AI hardware market growing from $8 billion in 2023 to $43 billion by 2033, a compound annual growth rate of 19.2%. That figure covers all edge AI hardware, but it signals rising demand for FPGA-based accelerators as well. The 5CEFA9F23I7N platform fits into this trend by offering the flexibility and performance that edge AI systems demand.

Key Takeaways

FPGAs are flexible and energy-efficient, which makes them a strong fit for edge AI tasks that need fast processing at low power.

Pairing FPGAs with embedded processors increases throughput and reduces latency, which matters for real-time decisions.

The 5CEFA9F23I7N platform shows how combining an FPGA with a processor can meet modern edge AI requirements for speed and flexibility.

For suitable workloads, FPGAs can lower operating costs and environmental impact because they often use less energy than general-purpose processors.

Because FPGAs are reprogrammable, FPGA-based systems can adapt to new AI models and tasks over a long service life without major hardware redesigns.

Understanding FPGAs in Edge Computing

What are FPGAs and their role in edge AI?

Field-programmable gate arrays (FPGAs) are integrated circuits whose logic can be configured after manufacturing to perform specific tasks. Unlike processors with a fixed architecture, an FPGA can be reconfigured to fit your workload, which makes it well suited to edge AI, where speed and low latency are critical.

FPGAs have three main parts: configurable logic blocks, I/O blocks (pads), and programmable interconnect. Logic blocks implement custom logic functions, typically with lookup tables (LUTs) and flip-flops, rather than executing instructions as a processor does. I/O blocks let the FPGA communicate with other devices, and the interconnect routes signals between blocks to form the complete circuit.
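To make the idea of a configurable logic block concrete, here is a minimal Python sketch of a lookup table (LUT): the same element behaves as an AND gate or a majority gate depending only on the truth table loaded into it. It is a simplified software analogy; real logic blocks, such as the adaptive logic modules (ALMs) in Cyclone V devices, also contain registers and carry logic, and the class and function names here are illustrative only.

```python
class LUT:
    """A k-input lookup table: its 'configuration' is one output bit per input combination."""

    def __init__(self, num_inputs: int, truth_table: list[int]):
        if len(truth_table) != 2 ** num_inputs:
            raise ValueError("truth table must have 2**num_inputs entries")
        self.num_inputs = num_inputs
        self.truth_table = truth_table

    def evaluate(self, *inputs: int) -> int:
        if len(inputs) != self.num_inputs:
            raise ValueError("wrong number of inputs")
        # Treat the input bits as an index into the truth table (inputs[0] is the LSB).
        index = 0
        for bit_position, bit in enumerate(inputs):
            index |= (bit & 1) << bit_position
        return self.truth_table[index]


def make_lut(num_inputs, func):
    """'Program' a LUT by tabulating a Boolean function over every input combination."""
    table = []
    for index in range(2 ** num_inputs):
        bits = [(index >> i) & 1 for i in range(num_inputs)]
        table.append(func(*bits) & 1)
    return LUT(num_inputs, table)


# The same kind of element, configured two different ways.
and_gate = make_lut(2, lambda a, b: a & b)
majority = make_lut(3, lambda a, b, c: 1 if a + b + c >= 2 else 0)

print(and_gate.evaluate(1, 1))     # 1
print(majority.evaluate(1, 0, 1))  # 1
print(majority.evaluate(0, 0, 1))  # 0
```

Reprogramming an FPGA amounts to loading new tables like these (plus new routing), which is why the same chip can implement very different circuits.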

Because they process data quickly and efficiently, FPGAs are a good fit for edge devices that need fast processing within a tight power budget.

Key features of FPGAs for edge computing

Several FPGA characteristics suit edge computing: algorithmic flexibility, energy efficiency, parallel handling of multiple tasks, fixed hardware pipelines, and low heat output. Each one helps edge devices perform better.

| Feature | How It Helps in Edge Computing |
|---|---|
| Algorithmic flexibility | Supports many tasks and algorithms through reconfiguration |
| Energy efficiency | Draws less power, which suits small devices |
| Parallel task handling | Runs many jobs at once |
| Fixed pipeline | Delivers fast, predictable latency |
| Low heat output | Needs less cooling, which saves money |

These features make FPGAs reliable and efficient for edge computing.
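The "fixed pipeline" row can be made concrete with simple cycle arithmetic. This sketch assumes an idealized pipeline in which each of D stages takes exactly one clock cycle; the 12-stage depth and 150 MHz clock are hypothetical values, not timing results for any particular device.

```python
def sequential_cycles(num_items: int, depth: int) -> int:
    # Without pipelining, each item finishes all D stages before the next starts.
    return num_items * depth


def pipelined_cycles(num_items: int, depth: int) -> int:
    # With a full pipeline, the first result appears after D cycles and
    # another result completes on every cycle after that.
    if num_items == 0:
        return 0
    return depth + (num_items - 1)


def latency_us(cycles: int, clock_mhz: float) -> float:
    # cycles divided by a clock in MHz gives microseconds.
    return cycles / clock_mhz


depth = 12         # hypothetical number of pipeline stages
clock_mhz = 150.0  # hypothetical fabric clock
items = 1000

seq = sequential_cycles(items, depth)
pipe = pipelined_cycles(items, depth)
print(seq, pipe)                              # 12000 1011
print(latency_us(depth, clock_mhz))           # per-item latency in microseconds: 0.08
print(round(latency_us(pipe, clock_mhz), 2))  # whole batch in microseconds: 6.74
```

Two points follow from the model: throughput approaches one result per clock once the pipeline is full, and each item's latency stays fixed at D cycles regardless of load, which is what makes FPGA latency predictable.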

Why FPGAs are critical for edge AI applications

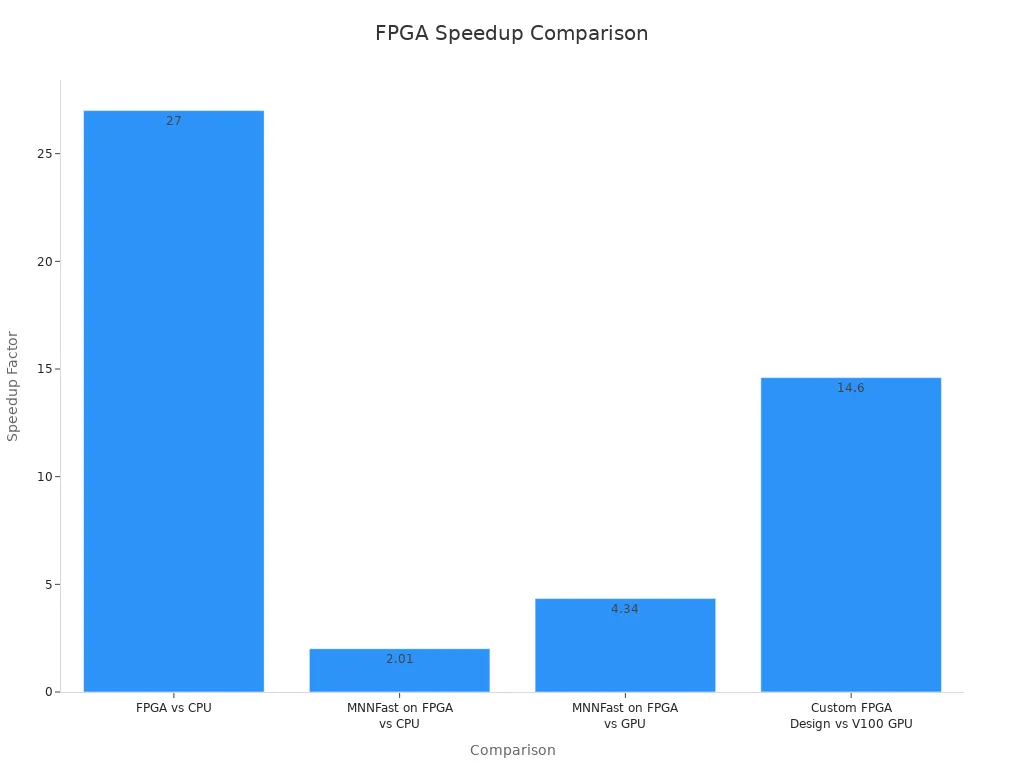

Speed and energy efficiency make FPGAs important for edge AI. Published benchmarks on specific workloads have reported FPGA implementations running 27 times faster and 81 times more energy-efficiently than CPUs, and using 8.8 times less energy than GPUs. Results vary by workload and implementation, but figures like these make FPGAs a strong candidate for edge AI systems that need quick decisions at low power.

| Comparison | Speed Advantage | Energy-Efficiency Advantage |
|---|---|---|
| FPGA vs. CPU | 27× | 81× |
| FPGA vs. GPU | N/A | 8.8× |
| MNNFast on FPGA vs. CPU | 2.01× | 6.54× |
| MNNFast on FPGA vs. GPU | 4.34× | N/A |
| Custom FPGA design vs. V100 GPU | 14.6× | N/A |

This combination of speed and efficiency is why FPGAs appear in advanced edge AI applications such as self-driving cars and smart cities.

FPGA and Embedded Processor Fusion for Edge AI

The idea of combining FPGAs with embedded processors

Embedded processor fusion means using an FPGA and a processor together, with each handling the work it does best. The FPGA excels at fast, parallel, data-heavy tasks, while the processor is better at running complex, control-heavy software and managing the system.

This combination helps edge AI in several ways. Because data is processed close to where it is generated, the system depends less on high-bandwidth network links and keeps sensitive data local. Handling data on the device cuts both latency and network traffic, which is especially valuable in vehicles and robots that must make quick decisions.

A useful analogy is sensor fusion, where each sense contributes different information and together they produce a fuller picture. Processor fusion works the same way, combining complementary hardware to make edge AI systems more capable.

How combining FPGAs and processors improves edge AI

Used together, FPGAs and processors make edge AI systems stronger. The FPGA runs time-critical, highly parallel tasks with low, predictable latency, while the processor handles complex control logic, sequential algorithms, and system management.

| Feature | Benefit |
|---|---|
| Fast processing | FPGAs handle time-critical tasks quickly, which suits edge AI. |
| Low latency | Short response times improve AI reaction speed. |
| Custom tasks | FPGA logic can be configured for specific jobs, boosting performance. |
| Smart task sharing | The FPGA and processor split the workload, each handling what it does best. |

The combination also supports real-time image correction and custom AI accelerators. Integrating these functions on a single board saves space and improves efficiency, resulting in a compact, powerful system for AI tasks.
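Smart task sharing can be expressed as a simple placement heuristic. The Task fields and the rules in assign() below are hypothetical illustrations of the division of labor described above, not a vendor scheduling API; a real design would base the split on profiling and on the FPGA resources actually available.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    parallelizable: bool    # can the work be spread across many hardware units?
    latency_critical: bool  # must it meet a hard real-time deadline?
    control_heavy: bool     # lots of branching, OS calls, or file/network I/O?


def assign(task: Task) -> str:
    """Hypothetical rule: control-heavy work goes to the processor,
    parallel or deadline-bound work goes to the FPGA fabric."""
    if task.control_heavy:
        return "processor"
    if task.parallelizable or task.latency_critical:
        return "fpga"
    return "processor"


pipeline = [
    Task("camera frame preprocessing", parallelizable=True, latency_critical=True, control_heavy=False),
    Task("CNN convolution layers", parallelizable=True, latency_critical=True, control_heavy=False),
    Task("result logging and upload", parallelizable=False, latency_critical=False, control_heavy=True),
    Task("model update management", parallelizable=False, latency_critical=False, control_heavy=True),
]

for task in pipeline:
    print(f"{task.name:30} -> {assign(task)}")
```

Checking control-heavy work first is a deliberate choice: branch-heavy code with OS and network calls maps poorly to fabric logic even when parts of it could run in parallel.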

The importance of 5CEFA9F23I7N in edge AI

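The 5CEFA9F23I7N platform illustrates how an FPGA and a processor can work together, combining FPGA flexibility with processor control for a wide range of edge AI uses. Because it is a Cyclone V E device, which does not include the hard ARM processor system found in the Cyclone V SE, SX, and ST variants, the processor side is typically a soft-core processor such as Nios II built in the FPGA fabric, or an external host processor. Its fast data handling and low power consumption are key for edge devices.

Because the FPGA can be reconfigured, the platform can be updated as AI requirements change. Its variable-precision DSP blocks can accelerate the multiply-accumulate operations at the core of neural network inference. Whether for smart cars, smart cities, or medical devices, it provides a flexible foundation for edge AI.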
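Neural network inference is dominated by multiply-accumulate (MAC) operations, which FPGA designs commonly implement in low-precision fixed-point arithmetic on hardened DSP blocks. The sketch below shows the idea in software with symmetric int8 quantization and an integer dot product; the weights, activations, and per-tensor scaling scheme are illustrative assumptions, not a Cyclone V DSP configuration.

```python
def quantize(values, scale):
    """Map floats to int8 with round-to-nearest and saturation to [-128, 127]."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def int_dot(qa, qb):
    """Integer dot product with a wide accumulator, as a hardware MAC chain would use."""
    acc = 0
    for a, b in zip(qa, qb):
        acc += a * b  # one multiply-accumulate per element pair
    return acc


weights = [0.3, -0.7, 0.2, 0.9]
activations = [1.5, 0.4, -2.0, 0.8]

# Per-tensor scales chosen so the largest magnitude maps to 127.
w_scale = max(abs(w) for w in weights) / 127
a_scale = max(abs(a) for a in activations) / 127

qw = quantize(weights, w_scale)
qa = quantize(activations, a_scale)

approx = int_dot(qw, qa) * w_scale * a_scale  # dequantize the integer result
exact = sum(w * a for w, a in zip(weights, activations))
print(qw, qa)
print(f"exact={exact:.4f} int8 approx={approx:.4f}")
```

The integer result stays close to the floating-point one, while each multiply needs only an 8-bit by 8-bit multiplier, which is far cheaper in FPGA resources than floating-point hardware.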

Key Benefits of FPGA-Accelerated Edge AI

Real-time processing and low latency

FPGA-powered edge AI excels at real-time work. FPGAs suit tasks that need immediate action, such as monitoring sensors or detecting faults, because they process many data streams in parallel, which reduces delays. That makes them well suited to devices where speed is critical.

| Benefit | Description |
|---|---|
| Faster processing | For suitable workloads, FPGAs outperform general-purpose CPUs, so decisions happen sooner. |
| Energy efficiency | Lower power draw reduces the cost of always-on monitoring. |
| Real-time data processing | FPGAs process sensor data as it arrives, helping detect or predict problems quickly. |

The result is faster decisions and better overall responsiveness, which is especially valuable in self-driving cars and factory machinery, where delays can cause serious problems.

Energy efficiency and sustainability

FPGAs can be an energy-efficient, environmentally friendly option for edge computing. For suitable workloads they draw less power than general-purpose processors while still performing well. Their advantage is greatest when an application's lifetime is short or when one device is reused for many applications.

By one comparison, FPGAs can be a better choice than ASICs when:

The application's lifetime is under 1.6 years.

The same FPGA is reused for five or more applications.

Fewer than 2 million units are needed.
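The three conditions listed above can be turned into a quick screening check. This is a minimal sketch that assumes each condition counts on its own; the comparison behind these thresholds may weigh them jointly, so the output is a prompt for a full lifecycle analysis rather than a verdict. The function name and example product are hypothetical.

```python
def fpga_favoured_reasons(lifetime_years: float, num_applications: int, units: int) -> list[str]:
    """Return which of the listed conditions point toward an FPGA over an ASIC.

    ASSUMPTION: each condition is treated as sufficient on its own.
    """
    reasons = []
    if lifetime_years < 1.6:
        reasons.append("application lifetime under 1.6 years")
    if num_applications >= 5:
        reasons.append("device reused for five or more applications")
    if units < 2_000_000:
        reasons.append("volume below 2 million units")
    return reasons


# Hypothetical product: 5-year life, six applications over its lifetime, 50,000 units.
print(fpga_favoured_reasons(lifetime_years=5, num_applications=6, units=50_000))
```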

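Reported results for Xilinx™ FPGAs cite gains of over 100 times in speed and energy efficiency compared with older systems, although such figures depend heavily on the workload.

Lower energy use cuts operating costs and helps meet the growing demand for greener edge AI technology.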

Scalability and adaptability for diverse workloads

FPGAs can scale and adapt to many AI workloads. Because their hardware can be reconfigured for specific jobs, they perform well across different domains.

Reported speedups reach up to 100 times for tasks such as text search and machine learning.

Power consumption can drop by up to 10 times, which matters for energy-constrained devices.

This flexibility makes FPGAs useful in settings from smart cities to medical devices. Their ability to run many operations in parallel helps them handle demanding AI workloads and opens the door to new edge AI applications.

Long deployment lifetimes and hardware customization

Edge AI systems often need to stay in service for many years without frequent hardware changes. FPGAs suit this requirement because their hardware logic itself can be reprogrammed in the field, unlike the fixed circuitry of a conventional processor. That keeps a deployed system useful as AI models improve.

Hardware customization brings significant advantages. You can tailor the design to specific requirements, such as low-latency decisions or high data throughput, which improves performance and saves energy. For example, fixed (unchanging) models running on custom FPGA designs are often faster and more power-efficient than the same models on GPUs.

| Advantage | How It Helps |
|---|---|
| Speed and efficiency | For fixed models, custom hardware can beat GPUs in speed and energy use. |
| Predictable performance | FPGAs deliver consistent, deterministic timing, which suits real-time tasks. |
| Scalability and parallelism | Custom designs can handle large models and data volumes efficiently. |
| Cost savings at scale | Custom FPGA deployments can reduce costs for large services. |

Tip: Use hardware customization to make your system fit specific tasks. This keeps it fast and cost-effective over time.

FPGAs are also dependable for safety-critical tasks. Their consistent timing matters in applications such as self-driving cars and factory machinery, and their scalability lets them take on more work without a full system upgrade.

Choosing FPGA-based solutions means investing in technology that lasts and adapts, which makes them a smart choice for edge AI systems that need long service lives and efficiency.

Challenges of Using FPGAs in Edge AI Systems

Complexity of FPGA programming and integration

Setting up FPGAs for edge computing is not easy. Several difficult areas must be handled for the system to work well:

Robustness: Making sure the AI behaves correctly even with unusual or unexpected inputs.

Resource Management: Using the FPGA's limited logic, memory, and DSP resources wisely.

Timing Constraints: Meeting strict timing requirements for real-time tasks.

Debugging Challenges: Tracking down hardware problems, which can be time-consuming.

These tasks require strong knowledge of both hardware and software. Compared with programming a conventional processor, configuring and optimizing an FPGA takes specialized skills, which slows development and increases the chance of mistakes.

Higher initial costs and resource requirements

FPGA-based edge AI systems can be expensive to start. Consider the following when planning a budget:

Using more than one FPGA raises costs and adds design complexity.

To avoid schedule delays, plan to keep FPGA resource utilization below 50%. Leaving headroom makes routing and timing closure easier and reduces project risk.

Development boards and software tools add to the overall cost.
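Following the 50% planning guideline above, a small check can flag resources that exceed the budget. The resource names and all usage and capacity figures below are hypothetical placeholders; in practice they would come from your toolchain's resource utilization report (for Intel devices, the Quartus Prime fitter report).

```python
def over_budget(used: dict[str, int], capacity: dict[str, int], limit: float = 0.5) -> dict[str, float]:
    """Return {resource: utilization} for every resource above the planning limit."""
    flagged = {}
    for resource, amount in used.items():
        ratio = amount / capacity[resource]
        if ratio > limit:
            flagged[resource] = round(ratio, 3)
    return flagged


capacity = {"logic": 100_000, "memory_blocks": 400, "dsp_blocks": 300}  # hypothetical device
used = {"logic": 42_000, "memory_blocks": 260, "dsp_blocks": 180}       # hypothetical design

print(over_budget(used, capacity))
```

Here logic sits comfortably at 42%, but memory (65%) and DSP (60%) usage would be flagged early, before they turn into late routing or timing problems.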

Skilled engineers are also expensive and hard to find, which can slow a project. Working with specialists improves planning but adds to the cost. Even so, FPGAs often pay off in the long run.

Limited availability of skilled developers

Developers who understand both AI and FPGA technology are hard to find, and this talent shortage makes FPGA-based edge AI systems harder to build.

| Factor | Description |
|---|---|
| High development costs | Paying for skilled workers and tools can be too expensive for some teams. |
| Skill shortages | Few people are trained in both AI and FPGA technologies. |

The shortage raises costs and slows innovation. Teams may need to train staff or partner with experts, and closing this talent gap is key to expanding edge computing.

Longer development cycles compared to other technologies

FPGA projects in edge AI generally take longer than processor-based ones, because they require specialized skills and you are designing the hardware itself rather than writing software for a fixed processor.

Programming FPGAs requires both hardware and software skills; gaps in either cause delays.

Adapting the hardware for new AI models is valuable but adds design and verification time.

Integrating FPGAs with other components, such as processors, adds complexity because every part must work together correctly.

Note: FPGAs are flexible and powerful but need more time to develop.

Debugging FPGA systems also takes longer. A hardware issue may require design changes and a lengthy recompilation (synthesis, place, and route) rather than a quick software fix, which slows progress. The shortage of skilled FPGA engineers makes building a team harder still.

Despite these challenges, FPGAs can be worth the investment. Their ability to adapt to new AI requirements keeps systems useful for years, and careful planning with the right tools helps you manage the difficulties and get the most from them.

Practical Strategies for Edge AI Application Design

Picking the best FPGA model for your needs

Choosing the right FPGA is key to a better edge AI system. Evaluate candidates with metrics that match your project's goals: frames per second per watt (FPS/W), TFLOPs-per-watt, and raw FLOPS together help balance speed against energy use.

| Metric | What It Means |
|---|---|
| FPS/W | Frames processed per second for each watt consumed; balances speed against power. |
| TFLOPs-per-watt | Trillions of floating-point operations per second per watt; a measure of energy efficiency, where FPGAs often compare well with GPUs. |
| FLOPS | Floating-point operations per second; a measure of raw compute throughput that helps compare systems fairly. |
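The FPS-per-watt and TFLOPs-per-watt metrics above are straightforward to compute from benchmark runs. In this sketch, the candidate names, frame counts, run times, power readings, and per-frame operation count are all hypothetical placeholders, and FLOPS is derived from an assumed per-frame cost rather than measured directly.

```python
def fps_per_watt(frames: int, seconds: float, avg_power_w: float) -> float:
    """Frames per second delivered for each watt of average power."""
    return (frames / seconds) / avg_power_w


def tflops_per_watt(flops_per_frame: float, frames: int, seconds: float, avg_power_w: float) -> float:
    """Sustained TFLOPS divided by average power."""
    flops_per_second = flops_per_frame * frames / seconds
    return flops_per_second / 1e12 / avg_power_w


MODEL_FLOPS_PER_FRAME = 2e9  # hypothetical cost of one inference

# name: (frames processed, wall-clock seconds, average power in watts); all hypothetical
measurements = {
    "fpga_candidate": (3_000, 10.0, 5.0),
    "gpu_candidate": (9_000, 10.0, 30.0),
}

for name, (frames, secs, watts) in measurements.items():
    print(
        f"{name}: {frames / secs:.0f} FPS, "
        f"{fps_per_watt(frames, secs, watts):.1f} FPS/W, "
        f"{tflops_per_watt(MODEL_FLOPS_PER_FRAME, frames, secs, watts):.3f} TFLOPs-per-watt"
    )
```

In this made-up example the GPU delivers three times the throughput but only half the FPS per watt, which is exactly the kind of trade-off these metrics are meant to expose.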

FPGAs suit real-time tasks that need high speed at low power, and configuring them for a specific job improves results further. Simplifying neural networks by pruning unnecessary weights or connections also increases speed and saves energy. These traits make FPGAs a strong fit for self-driving cars and smart factories.
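One common way to simplify a network is magnitude pruning: the weights with the smallest absolute values are set to zero. In a fixed FPGA design, multiplications by zero-valued weights can be removed from the datapath, saving multipliers and memory. This is a generic sketch of the technique with a hypothetical layer; practical flows usually fine-tune the model after pruning to recover accuracy.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Return a copy of weights with the smallest `sparsity` fraction set to zero."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    num_to_prune = int(len(weights) * sparsity)
    # Indices ordered by weight magnitude, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:num_to_prune]:
        pruned[i] = 0.0
    return pruned


layer = [0.9, -0.05, 0.3, 0.01, -0.7, 0.02, 0.4, -0.1]  # hypothetical weights
print(magnitude_prune(layer, 0.5))  # [0.9, 0.0, 0.3, 0.0, -0.7, 0.0, 0.4, 0.0]
```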

Tip: Choose an FPGA with enough spare capacity for future design updates. This keeps your system ready for new AI tasks.

Using hardware-software co-design to improve systems

Hardware-software co-design develops an edge AI system's hardware and software together rather than separately. Tailoring the hardware to the workload improves performance and makes the system easier to scale, and splitting tasks between local devices and servers reduces the data that must be shared, improving privacy and flexibility.

| Approach | Co-Design Benefit |
|---|---|
| Custom hardware setups | Speeds up systems by targeting specific tasks. |
| Task splitting | Reduces data sharing, improving privacy and scalability. |
| Real-time task handling | Helps meet tight response-time requirements, such as fast image recognition. |
| Parameter sharing | Reduces computation and makes models more flexible. |

Tight deadlines, such as processing an image within 100 ms, show why co-design matters. Sharing parameters and work across tasks lowers computing requirements, which keeps edge AI systems fast and affordable.
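Splitting work between the device and a server can be framed as choosing a split point in a layered model so that the 100 ms deadline is met. The sketch below is one possible approach, a brute-force search over split points, using hypothetical per-layer timings, activation sizes, and link speed; network cost is simplified to size divided by bandwidth. Among the feasible splits, it keeps the most layers on the device, since that minimizes the data leaving it.

```python
def end_to_end_ms(split, device_ms, server_ms, out_kb, input_kb, link_kb_per_ms):
    """Latency when layers [0, split) run on the device and [split, n) on the server."""
    local = sum(device_ms[:split])
    remote = sum(server_ms[split:])
    if split == len(device_ms):
        transfer_kb = 0.0                # fully local (uploading the final result is not modeled)
    elif split == 0:
        transfer_kb = input_kb           # send the raw input to the server
    else:
        transfer_kb = out_kb[split - 1]  # send the intermediate activations
    return local + transfer_kb / link_kb_per_ms + remote


def best_split(device_ms, server_ms, out_kb, input_kb, link_kb_per_ms, deadline_ms):
    """Among splits that meet the deadline, keep the most layers on the device."""
    feasible = []
    for split in range(len(device_ms) + 1):
        t = end_to_end_ms(split, device_ms, server_ms, out_kb, input_kb, link_kb_per_ms)
        if t <= deadline_ms:
            feasible.append((split, t))
    return max(feasible, key=lambda option: option[0]) if feasible else None


# Hypothetical four-layer model.
device_ms = [20, 30, 40, 30]  # per-layer time on the edge device
server_ms = [2, 3, 4, 3]      # per-layer time on the server
out_kb = [300, 100, 20, 1]    # size of each layer's output
input_kb = 600                # size of the raw image
link_kb_per_ms = 10           # roughly a 10 MB/s uplink

print(best_split(device_ms, server_ms, out_kb, input_kb, link_kb_per_ms, deadline_ms=100))  # (3, 95.0)
```

Running everything locally would take 120 ms and miss the deadline, so the search keeps three layers on the device and ships only the small 20 KB activation to the server.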

Making FPGA development easier with tools

Programming FPGAs can be hard, but newer tools make it simpler. High-level synthesis (HLS) tools let you program FPGAs in C or C++, reducing the need for deep hardware-design expertise. OpenCL-based frameworks from Intel and Xilinx also make development easier.

HLS tools simplify programming by hiding hardware details.

OpenCL tools make it easier to focus on designing applications instead of hardware.

These tools speed up development, making FPGAs more practical for edge AI.

These tools help you build systems faster while keeping FPGAs efficient and flexible, so you can create capable edge AI systems with less hardware expertise.

Note: Check out different frameworks to find the best one for your project. This makes it easier to build and launch your system quickly.

Testing and improving for real-world edge AI use

Testing confirms that your edge AI system works as intended. Simulate real-world conditions and tune your FPGA-based system for the best performance. Here's how:

Use Real-Life Data

Test with data similar to what your system will handle in the field. If your AI is for video security, for example, try footage captured in different weather and lighting. This exposes problems early.

Push Your System Hard

Stress-test the system with heavy workloads. Check how it behaves under high data loads and whether it stays fast. This reveals weak spots in the design so you can fix them.

Manage Resources Better

FPGAs have limited logic and on-chip memory, so check how these resources are used. Vendor tools such as Intel Quartus Prime (for Intel devices like the 5CEFA9F23I7N) or Xilinx Vivado (for Xilinx devices) report resource usage and can reveal waste. Rebalance resources to improve performance without increasing power.

Test in Real Settings

Try the system where it will actually be deployed, under real conditions such as unreliable network connections or extreme heat and cold. This confirms that it stays robust and reliable.

Tip: Track latency, data throughput, and power consumption during tests. These measurements show where the system can improve.

Keep Improving

Testing doesn't stop at launch. Collect feedback and field data after deployment, and use them to refine the system and meet new requirements.

Following these steps helps prepare your FPGA-powered edge AI system for real-world tasks.
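For the "Push Your System Hard" step, a small harness that records per-request latency and counts deadline misses is a useful starting point. run_inference() below is a hypothetical placeholder that only sleeps to simulate variable processing time; in a real test it would call your accelerator's driver or host API. For real-time systems, tail latency (p99) and deadline misses matter more than the average.

```python
import random
import statistics
import time


def run_inference(frame):
    """HYPOTHETICAL stand-in for a call into the FPGA accelerator's driver.
    It only sleeps for 1-3 ms to simulate variable processing time."""
    time.sleep(random.uniform(0.001, 0.003))
    return sum(frame) % 2


def stress_test(num_requests, frame_size, deadline_ms):
    latencies_ms = []
    for _ in range(num_requests):
        frame = [random.randint(0, 255) for _ in range(frame_size)]
        start = time.perf_counter()
        run_inference(frame)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    return {
        "mean_ms": statistics.mean(latencies_ms),
        # 99th percentile: the last of the 99 cut points that split the data into 100 groups.
        "p99_ms": statistics.quantiles(latencies_ms, n=100)[98],
        "max_ms": max(latencies_ms),
        "missed_deadlines": sum(1 for t in latencies_ms if t > deadline_ms),
    }


report = stress_test(num_requests=200, frame_size=1024, deadline_ms=10.0)
for key, value in report.items():
    print(f"{key}: {value:.2f}" if isinstance(value, float) else f"{key}: {value}")
```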

Real-World Uses of FPGAs in Edge Computing

Industrial IoT and Predictive Maintenance

In factories, predictive maintenance prevents machine breakdowns by spotting early signs of trouble. FPGAs suit this work because they can monitor equipment in real time and process the data quickly. The same approach can track the aging of FPGAs themselves: in one study of 298 FPGAs over 280 days, switching frequency degraded by only 0.064%, and a machine learning model trained on that data predicted further degradation over the next 100 days with an error of just 0.002%. Accurate forecasts like these help factories avoid costly repairs and keep equipment running longer.

FPGAs can also analyze large volumes of sensor data to pinpoint weak spots in machines, helping maintenance teams focus on the right parts. Because the analysis runs locally, less data crosses the network, which saves bandwidth and makes systems more reliable.
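The simplest form of degradation forecasting is fitting a trend to past measurements and extrapolating it. The sketch below uses an ordinary least-squares line as a deliberately simple stand-in; the study mentioned above used machine learning, and its method is not reproduced here. The readings are hypothetical, chosen only so that they end at the quoted 0.064% figure.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# HYPOTHETICAL readings: percent drop in switching frequency.
# Real aging data is rarely this linear.
days = [0, 70, 140, 210, 280]
degradation_pct = [0.000, 0.016, 0.032, 0.048, 0.064]

slope, intercept = fit_line(days, degradation_pct)
forecast_day = 380  # 100 days past the last measurement
forecast = slope * forecast_day + intercept
print(f"predicted degradation on day {forecast_day}: {forecast:.4f}%")
```

A maintenance system would compare such forecasts against a failure threshold and schedule service before the threshold is reached.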

Self-Driving Cars and Quick Decisions

Self-driving cars must make fast decisions to stay safe. FPGAs can be used in driver-assistance functions such as lane keeping and emergency braking, where they process sensor data quickly enough for the car to react in real time. Faster reactions to sudden changes, such as obstacles or traffic, help prevent accidents.

Low processing latency is essential for these quick reactions, and accelerating AI tasks on FPGAs helps vehicles respond smoothly even in difficult situations.
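A quick calculation shows why latency matters at road speeds: every millisecond of processing delay is distance traveled before the system can act. The function below is a simple constant-speed illustration that ignores braking dynamics; the speed and latency values are examples only.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance covered at constant speed while the system is still processing."""
    speed_m_per_s = speed_kmh / 3.6  # km/h -> m/s
    return speed_m_per_s * (latency_ms / 1000.0)


for latency_ms in (10, 50, 100):
    d = distance_during_latency_m(100, latency_ms)
    print(f"{latency_ms:>3} ms at 100 km/h -> {d:.2f} m before the system can react")
```

At 100 km/h, cutting processing latency from 100 ms to 10 ms means reacting about 2.5 m sooner.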

Smart Cities and Edge Surveillance

Smart cities use edge computing to improve safety and manage resources. FPGA-based cameras can process video in real time, detect unusual events such as break-ins or traffic incidents, and alert authorities quickly.

FPGAs also support facial recognition and crowd monitoring. Their low energy use and ability to run many tasks in parallel make them a good fit for smart city systems, helping create safer and more efficient cities.
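As a simple illustration of on-camera event detection, the sketch below uses frame differencing: it flags motion when enough pixels change between two consecutive grayscale frames. This classic technique is a stand-in, not the method of any particular product, and both thresholds are hypothetical. Per-pixel compare-and-count logic like this is well suited to an FPGA streaming pipeline, since every pixel passes through the same simple operations.

```python
def changed_fraction(prev, curr, pixel_threshold=25):
    """Fraction of pixels whose intensity changed by more than pixel_threshold."""
    changed = 0
    total = 0
    for row_prev, row_curr in zip(prev, curr):
        for p, c in zip(row_prev, row_curr):
            total += 1
            if abs(c - p) > pixel_threshold:
                changed += 1
    return changed / total


def motion_detected(prev, curr, area_threshold=0.05):
    return changed_fraction(prev, curr) > area_threshold


background = [[10] * 8 for _ in range(8)]  # hypothetical 8x8 grayscale frame
intruder = [row[:] for row in background]
for r in range(2, 5):
    for c in range(3, 6):
        intruder[r][c] = 200  # a 3x3 bright object appears

print(changed_fraction(background, intruder))  # 0.140625 (9 of 64 pixels)
print(motion_detected(background, intruder))   # True
```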

Healthcare and portable diagnostic devices

Portable medical devices are making advanced care more accessible. FPGAs help these devices run faster and more efficiently, with uses in medical imaging, real-time data processing, and smart healthcare systems.

In imaging equipment such as CT scanners, FPGAs accelerate image processing so that doctors see results sooner. That matters in emergencies, where every second counts and faster results mean faster treatment.

FPGAs can also process data with very low latency, delivering results within fractions of a second. Real-time image processing, for example, helps surgeons identify problems during procedures, which improves patient care and reduces errors.

FPGAs also run AI and machine learning workloads efficiently, keeping power consumption low during demanding calculations. That suits small devices with tight space and energy budgets, resulting in tools that are powerful, efficient, and portable.

Did you know? FPGA-based portable devices can perform tasks such as blood analysis, ultrasound imaging, and cardiac monitoring quickly and accurately.

Because FPGAs can be reprogrammed, these devices can keep pace with new medical technology, serving both small clinics and large hospitals. FPGA-powered tools are helping shape the future of healthcare.

Future Trends in FPGA-Accelerated Edge AI

Improvements in FPGA technology for AI tasks

FPGA technology is advancing quickly to meet AI demands. Vendors such as Intel and AMD are releasing FPGAs with AI-oriented features that deliver more performance at lower power. Partnerships between chipmakers and software companies are also producing FPGA solutions tailored to industries such as healthcare and automotive.

Governments are funding FPGA research as well, partly to reduce dependence on imported technology. Low-power FPGAs are another important development, handling AI tasks while saving energy for greener systems. More user-friendly development tools are also emerging, helping engineers build AI systems faster.

| Improvement Type | What It Does |
|---|---|
| New AI-oriented FPGAs | Intel and AMD are building faster, more energy-efficient FPGAs for AI. |
| Industry partnerships | Chip and software companies are teaming up on FPGA solutions for specific industries. |
| Energy-saving designs | New FPGAs use less power while running AI tasks, reducing environmental impact. |
| Government research support | Governments fund FPGA research to boost innovation and reduce technology imports. |
| Better development tools | User-friendly tools help engineers design AI systems more easily and quickly. |

Adding AI-specific features to FPGAs

Many modern FPGAs include dedicated AI hardware, such as the AI Engines in AMD Versal devices or the AI Tensor Blocks in Intel Stratix 10 NX FPGAs, which accelerate neural network inference and real-time data processing. These features let FPGAs handle demanding AI jobs faster, which suits edge systems where speed matters, such as self-driving cars and smart cities.

Open-source tools for easier FPGA programming

Open tools and standards are making FPGA programming simpler. OpenCL, an open standard, and high-level synthesis (HLS) tools let you program FPGAs with less hardware expertise, making it easier to build and launch AI systems quickly. Open-source projects also let developers share ideas and improve designs together, which is making FPGAs more accessible and more popular for edge AI systems.

The changing role of FPGAs in future edge AI systems

FPGAs are changing how edge AI systems work, and their importance is growing as technology needs evolve. They are no longer just supporting hardware components; they are now key accelerators for AI and machine learning at the edge.

AI and Machine Learning Acceleration: Companies are building FPGA-based AI accelerators that can handle demanding workloads faster than general-purpose processors. FPGAs can run neural network inference quickly, for example, which suits self-driving cars and smart cameras.

Teamwork with Other Processors: FPGAs increasingly work alongside CPUs and GPUs, with each processor doing what it does best. FPGAs handle low-latency data processing, CPUs control the system, and GPUs handle graphics and other massively parallel computation. This division of labor makes edge AI systems faster and more capable.

Easier to Use: High-level programming tools let engineers program FPGAs with less hardware expertise, which is drawing more engineers to FPGAs for edge computing.

Tip: If you're working on edge AI, consider FPGAs. They can make your system faster and more flexible, especially when it must handle many tasks in parallel.

FPGAs are becoming a major part of edge AI. Their speed, flexibility, and low energy use make them well suited to future systems, and they can help you build smarter, faster edge AI solutions.

In summary, FPGAs offer significant benefits for edge AI: higher speed, better scalability, and lower energy use. In one reported evaluation, for instance, an FPGA-based design improved performance by 1.6× and scalability by 2.5× at 32 nodes. Because FPGAs handle many tasks in parallel, they cut delays and speed up data processing, which makes them well suited to real-time applications.

| Metric | Result |
|---|---|
| Performance boost | 1.6× in early tests |
| Scalability | 2.5× better with 32 nodes |
| Lower latency | Tasks run in parallel |
| Faster data processing | More data processed in less time |
| Energy savings | Lower power consumption |

Pairing FPGAs with embedded processors strengthens these systems further. Because they can adapt to new AI requirements, they are a strong choice for future-ready edge AI projects.

FAQ

Why are FPGAs better than CPUs or GPUs for edge AI?

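For many fixed, latency-sensitive workloads, FPGAs can be faster and more energy-efficient than CPUs or GPUs because they run computations in parallel on custom hardware pipelines. That makes them a strong fit for real-time uses such as smart cars and factory systems.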

Can FPGAs change for new AI models?

Yes. FPGAs can be reprogrammed to support new AI models, so you don't need to replace the whole system. This saves time and money while keeping your setup up to date.

Are FPGAs good for small gadgets?

Often, yes. Many FPGAs run at low power and produce little heat, which suits devices such as smart cameras and portable medical equipment.

How do FPGA tools make building easier?

HLS tools and OpenCL let you describe designs in higher-level languages such as C, C++, or OpenCL C, so you can focus on the application rather than low-level hardware details.

Is learning FPGA programming hard?

FPGA programming can be tricky at first, but newer tools make it simpler. With practice, you can build capable edge AI systems.
